With the evolution of data and analytics consumption, our lives have become full of data. From ordering food 🍔🍕🍰 to checking the delivery status, from binge-watching 📺👀 to online shopping 🛒, we are always producing and consuming data.

Long gone are the days of static MIS reports and dependency on IT teams to produce them. Even prebuilt interactive reports are not adequate or flexible enough for a data-hungry workforce. Business users want empowerment 💪 to create their own insights; in other words, self-service capability.

Fortunately, modern BI tools such as Power BI, Tableau, and Qlik are quite capable of handling such self-service. But with freedom comes responsibility, and self-service BI brings the following challenges:

- Standards - Organizational standards for metric definitions, dimensional attributes, nomenclature, UI, etc. need to be consistent across the organization.

- Single source of truth - With everyone creating their own version of truth, it is very important to define the certified version.

- Governance - To avoid lawlessness there has to be a strong governance structure and defined roles.

- Dataflow Workspace - This layer needs to be created online, in the Power BI service only. It contains the data source connectivity and the data transformations.

- Dataset Workspace - This contains the imported data and the data model (relationships and cardinality). Basically, these are .PBIX files developed offline but with blank pages.

- Reporting Workspace - This contains the reports only. These are .PBIX files that connect to the Power BI dataset instead of the data source.
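
The layering above can be sketched as a small dependency check. This is only an illustration in plain Python, not a Power BI API: the `Artifact` class, the layer labels, and the sample names are all hypothetical, and it assumes that datasets are fed from dataflows, which the list implies but does not state outright.

```python
from dataclasses import dataclass

# Which upstream layer each layer is expected to connect to.
# The labels are hypothetical names for the three workspaces listed above.
ALLOWED_SOURCE = {
    "dataflow": "data_source",  # dataflows hold the data source connectivity
    "dataset": "dataflow",      # assumption: datasets import from dataflows
    "report": "dataset",        # reports connect to the dataset, not the source
}


@dataclass(frozen=True)
class Artifact:
    name: str
    layer: str         # "dataflow", "dataset", or "report"
    source_layer: str  # layer of whatever this artifact connects to


def layering_violations(artifacts):
    """Return the names of artifacts that bypass the expected upstream layer."""
    return [a.name for a in artifacts if ALLOWED_SOURCE.get(a.layer) != a.source_layer]


artifacts = [
    Artifact("Sales dataflow", "dataflow", "data_source"),
    Artifact("Sales model", "dataset", "dataflow"),
    Artifact("Sales dashboard", "report", "dataset"),
    Artifact("Ad hoc report", "report", "data_source"),  # skips the dataset layer
]

print(layering_violations(artifacts))  # ['Ad hoc report']
```

A check like this is one way to spot reports that go straight to the data source instead of the shared dataset.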


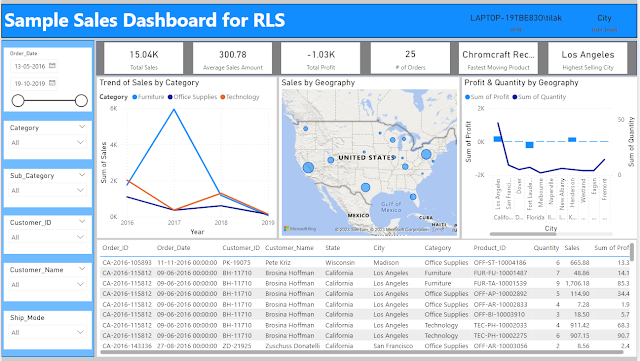

to ensure the credibility. In addition, we need to maintain RLS (Row-Level Security) 🔏 data to ensure data-level security.
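
To make the idea of RLS data concrete, here is a minimal sketch in plain Python. In Power BI itself, RLS is configured through roles with filter expressions; the code below only mimics the effect. The `USER_REGIONS` table, the e-mail addresses, and the sales rows are invented for illustration.

```python
# Hypothetical RLS data: the regions each user is allowed to see.
USER_REGIONS = {
    "asha@example.com": {"East"},
    "ben@example.com": {"East", "West"},
}

# Hypothetical fact rows.
SALES = [
    {"region": "East", "amount": 120},
    {"region": "West", "amount": 80},
    {"region": "North", "amount": 50},
]


def visible_rows(user, rows):
    """Keep only the rows whose region the user is mapped to."""
    allowed = USER_REGIONS.get(user, set())  # unmapped users see nothing
    return [row for row in rows if row["region"] in allowed]


def total_for(user):
    """Total sales as seen by one user after the row filter is applied."""
    return sum(row["amount"] for row in visible_rows(user, SALES))


print(total_for("asha@example.com"))  # 120
print(total_for("ben@example.com"))   # 200
```

The same report therefore shows different totals to different users, which is why the mapping table has to be kept current.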

- The Admin role should be used only for admin-related activities, such as creating or deleting workspaces and adding other admins.

- Users who do not need to perform specific admin-related activities should not have the Admin role.

- The Member role can be leveraged for all other controls and deployments. Members can even manage security for roles other than Admin.

- Members should be responsible for all content published to the larger audience. They should perform checks before final deployment.

- Contributors should not be allowed to update an app; that would do away with the benefit of having a gatekeeper, and the standard of the content could be compromised.

- Access should be given to user groups rather than individual users. That way, controlling access is easier and there are people to backfill for others.
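
Two of the guidelines above (groups over individuals, and no app updates by contributors) lend themselves to a simple audit. The sketch below is a hedged illustration only: the dictionary layout, the `principal_type` field, and the `contributors_can_update_app` flag are hypothetical names, not the shape of any real Power BI export.

```python
def audit_workspace(workspace):
    """Return a list of findings for one workspace description (hypothetical format)."""
    findings = []
    for entry in workspace["access"]:
        # Guideline: give access to user groups rather than individual users.
        if entry["principal_type"] != "group":
            findings.append(f"{entry['principal']}: grant access through a user group")
    # Guideline: contributors should not be allowed to update the app.
    if workspace.get("contributors_can_update_app", False):
        findings.append("contributors can update the app: reserve this for members")
    return findings


workspace = {
    "name": "Sales Reporting",
    "contributors_can_update_app": True,
    "access": [
        {"principal": "bi-admins", "principal_type": "group", "role": "Admin"},
        {"principal": "sales-analysts", "principal_type": "group", "role": "Contributor"},
        {"principal": "jo@example.com", "principal_type": "user", "role": "Member"},
    ],
}

for finding in audit_workspace(workspace):
    print(finding)
```

Whether a given user really needs the Admin role is a judgment call, so that guideline is left to a manual review rather than encoded here.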

- User 1 : BI Developer / Admin - Able to connect to the DB from Power BI Desktop. RLS doesn’t apply. Practically, they can do almost everything.

- User 2 : Data Analyst - Able to connect to the dataflow and dataset from Power BI Desktop.

- User 3 : BI Power User - Able to edit prebuilt published reports and create their own.

- User 4 : BI Explorer - Able to personalize and share the prebuilt reports. Self-service is limited to playing around with the prebuilt reports only.

- User 5 : BI Consumer - Able to view, slice and dice the reports with no self-service capability.
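
The five personas can also be written down as a capability matrix, which is handy when deciding which user group someone belongs in. This is a rough sketch: the capability labels are hypothetical shorthand for the abilities stated in the list, and nothing beyond what the list says is assumed (for example, the "almost everything" of User 1 is not expanded).

```python
# Capabilities exactly as stated in the persona list; labels are hypothetical.
PERSONA_CAPABILITIES = {
    "BI Developer / Admin": {"connect_db", "bypass_rls"},
    "Data Analyst": {"connect_dataflow", "connect_dataset"},
    "BI Power User": {"edit_published_reports", "create_reports"},
    "BI Explorer": {"personalize_reports", "share_reports"},
    "BI Consumer": {"view_reports", "slice_and_dice"},
}


def can(persona, capability):
    """True if the persona is explicitly listed with this capability."""
    return capability in PERSONA_CAPABILITIES.get(persona, set())


def personas_with(capability):
    """All personas explicitly listed with this capability, in list order."""
    return [p for p, caps in PERSONA_CAPABILITIES.items() if capability in caps]


print(can("BI Power User", "create_reports"))  # True
print(can("BI Consumer", "create_reports"))    # False
print(personas_with("connect_dataset"))        # ['Data Analyst']
```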